
Offshore Prompt Engineer Hiring Guide: How to Build AI Expertise that Scales


As conversational AI, chatbots, and generative models reshape business operations, Prompt Engineers have emerged as one of the most in-demand roles in the AI ecosystem. They are the creative and analytical minds behind how large language models (LLMs) such as GPT-4, Claude, Gemini, and Llama understand, interpret, and execute human instructions.

A skilled prompt engineer combines linguistic intuition with technical precision — crafting queries, workflows, and templates that extract high-quality, context-aware responses from AI systems. These professionals don’t just write prompts; they engineer the bridge between human intent and machine intelligence, shaping how AI agents think, respond, and evolve.

However, finding such hybrid talent locally can be both challenging and expensive. The demand for experts who understand both natural language and AI system design far exceeds supply in many mature markets. That’s why forward-thinking organisations increasingly hire offshore prompt engineers — to accelerate AI adoption, manage costs effectively, and tap into global creative-technical expertise.

This guide explains everything you need to know — from understanding the prompt engineering role to evaluating, hiring, and managing offshore experts who can transform LLMs into production-ready business solutions.

AI education and training ecosystems in countries like India, the Philippines, and Eastern Europe are rapidly advancing. These regions are producing a new generation of engineers skilled in:

Offshore hiring gives you access to a diverse, multilingual, and innovation-driven talent pool that brings unique cultural and linguistic perspectives — essential for building AI systems that interact naturally with global users.

Hiring a qualified prompt engineer in the UK, US, or Australia can cost £100,000–£150,000 or more per year, excluding recruitment overheads and benefits. By contrast, offshore engineers offer comparable expertise at 40–70% lower cost, enabling you to scale experimentation and product development without overextending your budget.

This cost advantage allows startups and enterprises alike to maintain larger, cross-functional AI teams — including prompt engineers, ML developers, and automation specialists — for the same investment as one local hire.

With offshore teams distributed across time zones, your AI projects no longer stop when your local team logs off. This creates a continuous development loop, ideal for:

This “follow-the-sun” model ensures faster turnaround times, uninterrupted progress, and more agile responses to evolving AI challenges.

Offshore prompt engineers don’t just provide additional manpower — they multiply innovation velocity. By combining your in-house product and domain knowledge with their LLM expertise, you can rapidly develop and deploy generative-AI capabilities such as:

This blended approach shortens your time-to-value and helps transform AI from a cost centre into a strategic capability.

A Prompt Engineer designs, tests, and optimises the instructions that guide large language models (LLMs) — such as GPT-4, Claude, Gemini, and Llama — to deliver accurate, context-aware, and task-specific responses.

This role blends linguistic insight, analytical reasoning, and technical implementation, sitting at the intersection of language, logic, and code.

While traditional software engineers write code to solve problems, prompt engineers write instructions that teach AI to reason and respond like a domain expert. They translate human intent into machine-understandable logic — ensuring outputs are not just syntactically correct, but semantically meaningful and aligned with business goals.

A skilled prompt engineer’s daily work involves a combination of creative experimentation, structured testing, and data-driven optimisation. Their key responsibilities include:

Prompt Engineers rely on a range of platforms, frameworks, and programming languages that enable them to experiment, deploy, and manage AI interactions at scale.

Common tools include:

A strong prompt engineer doesn’t just understand how to write better instructions — they understand how models think. They act as both linguists and system designers, continuously improving how AI systems interpret human context, follow logic, and generate useful, safe, and brand-aligned outputs.

Hiring a prompt engineer isn’t just about finding someone who can write clever questions for an AI model. It’s about finding a hybrid thinker — part linguist, part programmer, part systems designer — who can turn abstract business goals into AI-driven, measurable outcomes.

Below are the core technical, analytical, and creative competencies that distinguish a high-performing prompt engineer from a general AI practitioner.

Prompt engineers must deeply understand language structure, tone, and intent. They should be able to craft instructions that are clear, concise, and unambiguous — avoiding confusion that can lead to model “hallucinations.”

Look for:

This skill ensures that the model produces outputs that are not just technically correct but contextually aligned with user expectations — whether in customer support, marketing, or product communication.

An effective prompt engineer understands how large language models work under the hood — including tokenisation, temperature control, context windows, and reasoning limitations. They should know how different model families (e.g., GPT, Claude, Gemini, Mistral, Llama) interpret instructions and how to adjust prompts for performance, safety, and accuracy.
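Temperature is the easiest of these controls to show with numbers. Below is a minimal sketch in Python. It uses invented logits rather than output from any real model.

The model's raw next-token scores are divided by the temperature before a softmax is applied. Values below 1 concentrate probability on the top token, and values above 1 spread it out. Many APIs treat a temperature of 0 as greedy decoding; this sketch does not model that case.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw next-token scores (logits) into probabilities.

    Dividing by the temperature before the softmax sharpens the
    distribution when temperature < 1 and flattens it when > 1.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Illustrative logits for three candidate tokens (invented values).
logits = [2.0, 1.0, 0.1]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

A candidate who can explain why the first probability shrinks as `t` grows understands why low temperatures suit extraction and classification tasks, while higher ones suit brainstorming.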

Look for familiarity with:

Prompt engineering is an iterative, data-informed process. The best engineers are comfortable with both qualitative and quantitative evaluation — using feedback loops, performance metrics, and A/B testing to improve outputs.

They should be able to:

This analytical rigour ensures that AI systems evolve through evidence-based refinement rather than intuition alone.
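To make A/B testing concrete, suppose each prompt variant is run on the same set of test inputs, and a reviewer or automated check marks each output as pass or fail. The minimal sketch below assumes that pass/fail setup and uses invented counts. It compares the two pass rates with a standard pooled two-proportion z-test, using only the Python standard library.

```python
import math

def two_proportion_z_test(passes_a, n_a, passes_b, n_b):
    """Compare pass rates of prompt variants A and B.

    Returns (rate_a, rate_b, z, two_sided_p). Uses the pooled
    two-proportion z-test, which assumes independent samples and
    reasonably large n.
    """
    rate_a = passes_a / n_a
    rate_b = passes_b / n_b
    pooled = (passes_a + passes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # all passes or all fails in both groups: no evidence of a difference
        return rate_a, rate_b, 0.0, 1.0
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal CDF, computed via erf.
    p = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return rate_a, rate_b, z, p

# Invented example: variant B passes 168/200 reviews, variant A passes 140/200.
rate_a, rate_b, z, p = two_proportion_z_test(140, 200, 168, 200)
print(f"A={rate_a:.2f} B={rate_b:.2f} z={z:.2f} p={p:.4f}")
```

A small p-value suggests the difference is unlikely to be noise. With a handful of test cases, however, the same gap can easily be chance, which is why the size of the evaluation set matters as much as the score.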

Since prompt engineers often collaborate with AI developers and software engineers, they should possess basic coding and API integration skills to operationalise their work.

Common technical competencies include:

This technical fluency allows them to move beyond static prompts — building dynamic, data-connected AI systems that can perform complex reasoning and knowledge retrieval.
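As an illustration of data-connected prompting, here is a minimal sketch of the retrieval step in a retrieval-augmented prompt. It is deliberately simplified: relevance is scored by word overlap rather than embeddings. The knowledge snippets and function names are hypothetical.

```python
import re

def tokenize(text):
    """Lower-case word set; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, k=2):
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    scored = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return scored[:k]

def build_grounded_prompt(question, documents, k=2):
    """Inject the retrieved snippets so the model answers from them."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

# Hypothetical knowledge-base snippets.
docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Premium plans include priority support.",
]
print(build_grounded_prompt("How long do refunds take to be processed?", docs, k=1))
```

The instruction to admit when the context lacks an answer is the prompt-engineering half of the pattern. The retrieval function is the data half, and in production it would usually be a vector search.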

Prompt engineering is still an emerging discipline. The best practitioners have a scientific mindset combined with creative flexibility. They’re willing to experiment, fail fast, and iterate continuously to uncover optimal model behaviour.

Indicators of this skill include:

In essence, the prompt engineers who thrive treat AI not as a black box but as a collaborative, evolving system that responds to design and experimentation.

As AI becomes more embedded in business operations, prompt engineers must also consider data privacy, bias mitigation, and compliance standards. Look for candidates who understand:

A responsible prompt engineer ensures that innovation happens safely, ethically, and in alignment with enterprise governance.

In short, the ideal prompt engineer blends the analytical mindset of a data scientist, the fluency of a linguist, and the adaptability of a creative technologist — making them one of the most strategic hires in your AI transformation journey.

Hiring an offshore prompt engineer requires a balance between technical evaluation and creative judgment. Unlike traditional developer roles, prompt engineering blends cognitive, linguistic, and design-thinking skills — which means standard coding interviews often fail to capture true capability. The key is to build an evaluation process that measures not only how candidates understand AI models, but also how they guide them to produce consistent, business-ready outputs.

Before you begin sourcing candidates, clarify why you need a prompt engineer and what outcomes you expect.

For example:

Documenting your goals helps identify whether you need a generalist prompt engineer (for broad experimentation) or a specialist (focused on a specific domain, like healthcare, SaaS, or finance).

This clarity will shape your job description, screening process, and evaluation benchmarks.

Global talent hubs such as India, the Philippines, Eastern Europe, and Latin America are rapidly becoming strong sources of AI-specialised talent.

You can find offshore prompt engineers through:

However, for long-term roles, partnering with an established offshore hiring provider is often more reliable, because the provider can handle compliance, payroll, data protection, and ongoing performance management.

A robust evaluation process for prompt engineers should include four layers of assessment: linguistic clarity, technical reasoning, applied creativity, and problem-solving.

Ask candidates to design prompts for a specific business scenario, such as:

“Create a prompt that summarises a customer complaint email in a neutral, professional tone.”

or

“Design a multi-turn prompt that helps an AI agent qualify inbound sales leads.”

Evaluate based on:

Have the candidate work directly with a live LLM (e.g., GPT-4 or Claude) to demonstrate their iterative process — how they refine prompts based on output feedback. This shows their analytical thinking and understanding of model behaviour in real time.

Include lightweight programming or integration exercises to confirm familiarity with APIs, JSON formatting, and tools such as LangChain or PromptLayer.

For example:

“Create a simple Python script that calls an OpenAI endpoint with dynamic prompt inputs.”
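A candidate's answer to this exercise might look something like the sketch below, reusing the complaint-summary task from the prompt design exercise.

- It uses only the Python standard library and OpenAI's Chat Completions HTTP endpoint.
- The model name, system message, and template wording are assumptions chosen for illustration.
- The API key is read from an `OPENAI_API_KEY` environment variable.
- Building the request is kept separate from sending it, so the prompt logic can be checked without network access or API spend.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

# Prompt template with dynamic slots filled at call time.
PROMPT_TEMPLATE = (
    "Summarise the following customer complaint in a neutral, "
    "professional tone in no more than {max_sentences} sentences.\n\n"
    "Complaint:\n{complaint}"
)

def build_request(complaint, max_sentences=3, model="gpt-4o-mini", api_key=None):
    """Build (but do not send) a Chat Completions HTTP request."""
    payload = {
        "model": model,  # assumed model name; swap for whichever model you use
        "messages": [
            {"role": "system", "content": "You are a customer-support assistant."},
            {"role": "user", "content": PROMPT_TEMPLATE.format(
                complaint=complaint, max_sentences=max_sentences)},
        ],
        "temperature": 0.2,  # low temperature for consistent summaries
    }
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {key}"},
        method="POST",
    )

def send(request):
    """Send the request and return the first reply's text (needs network + key)."""
    with urllib.request.urlopen(request, timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]

req = build_request("My order arrived late and the box was damaged.")
print(req.full_url, req.get_method())
# To actually call the API (requires network access and OPENAI_API_KEY):
# print(send(req))
```

Interviewers can then ask the candidate to add error handling, retries, or structured JSON output. How they extend it says more than whether the first version runs.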

Use scenario-based questions to assess critical thinking, such as:

This step helps you gauge how well they understand the relationship between prompt logic, model performance, and user experience.

When scoring candidates, assess across the following dimensions:


To get the most from your offshore hires, build an environment that supports communication, experimentation, and feedback loops:

When managed effectively, offshore prompt engineers can drive continuous AI improvement — turning early-stage LLM experiments into reliable, scalable enterprise systems.

By combining local domain knowledge with offshore prompt engineering expertise, your organisation gains:

In short, offshore prompt engineers become the architects of your AI capability — converting static models into evolving systems that learn, adapt, and deliver business value every day.

Hiring offshore prompt engineers is only the first step. To unlock their full potential, you must build a structured onboarding and collaboration framework that aligns them with your product goals, data standards, and AI ethics policies.

A well-managed onboarding process doesn’t just help new engineers understand your systems — it empowers them to contribute creatively and strategically from day one.

The onboarding phase sets the tone for productivity and quality. Treat it as an AI immersion programme, not just an orientation.

a) Share Core Objectives and Use Cases

Help engineers understand the “why” behind your AI initiatives — the business problem, user personas, and expected outcomes. This contextual clarity is vital for writing prompts that reflect your organisation’s tone and values.

b) Provide Technical Environment Access Early

Ensure they have access to:

This eliminates setup delays and allows immediate engagement with real workflows.

c) Share Brand and Communication Guidelines

Because prompt engineers often work on customer-facing applications, they must understand your brand tone, compliance requirements, and conversation principles. Provide guidelines on:

Prompt engineering thrives on rapid experimentation and data-driven refinement. The faster your feedback loop, the faster your models improve.

a) Create Shared Evaluation Metrics: Define what “good” looks like for your AI outputs — accuracy, tone consistency, factual correctness, or compliance safety.

b) Use Structured Feedback Loops: Implement weekly model review sessions or async comments within shared docs. Encourage developers, designers, and business teams to annotate examples of good and bad outputs.

c) Track Progress Visibly: Use prompt versioning and tracing tools such as PromptLayer or LangSmith (LangChain’s tracing platform) to maintain transparency. A visible trail of improvement boosts accountability and learning.
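One way to give onshore and offshore reviewers a shared definition of "good" is to encode the agreed criteria as automated checks and report a pass rate per metric. This is a minimal sketch. The three checks below (a word limit, blame language, and a stated next step) are hypothetical examples for a support-reply use case, not a recommended standard. Criteria such as factual correctness usually still need human or model-based review.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Check:
    name: str
    passed: Callable[[str], bool]

# Hypothetical team-agreed checks; real rubrics would be richer.
CHECKS: List[Check] = [
    Check("length_ok", lambda out: len(out.split()) <= 80),
    Check("no_blame_language",
          lambda out: not re.search(r"\b(your fault|you failed)\b", out, re.I)),
    Check("mentions_next_step",
          lambda out: bool(re.search(r"\b(we will|next step)\b", out, re.I))),
]

def score_outputs(outputs: List[str], checks: List[Check] = CHECKS) -> Dict[str, float]:
    """Return the share of outputs passing each check (0.0 to 1.0)."""
    if not outputs:
        return {c.name: 0.0 for c in checks}
    return {c.name: sum(c.passed(o) for o in outputs) / len(outputs) for c in checks}

sample = [
    "Thanks for flagging the delay. We will send a replacement today.",
    "This was your fault for entering the wrong address.",
]
print(score_outputs(sample))
```

Because the checks are plain code, a weekly review can focus on the metrics that dropped rather than rereading every output.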

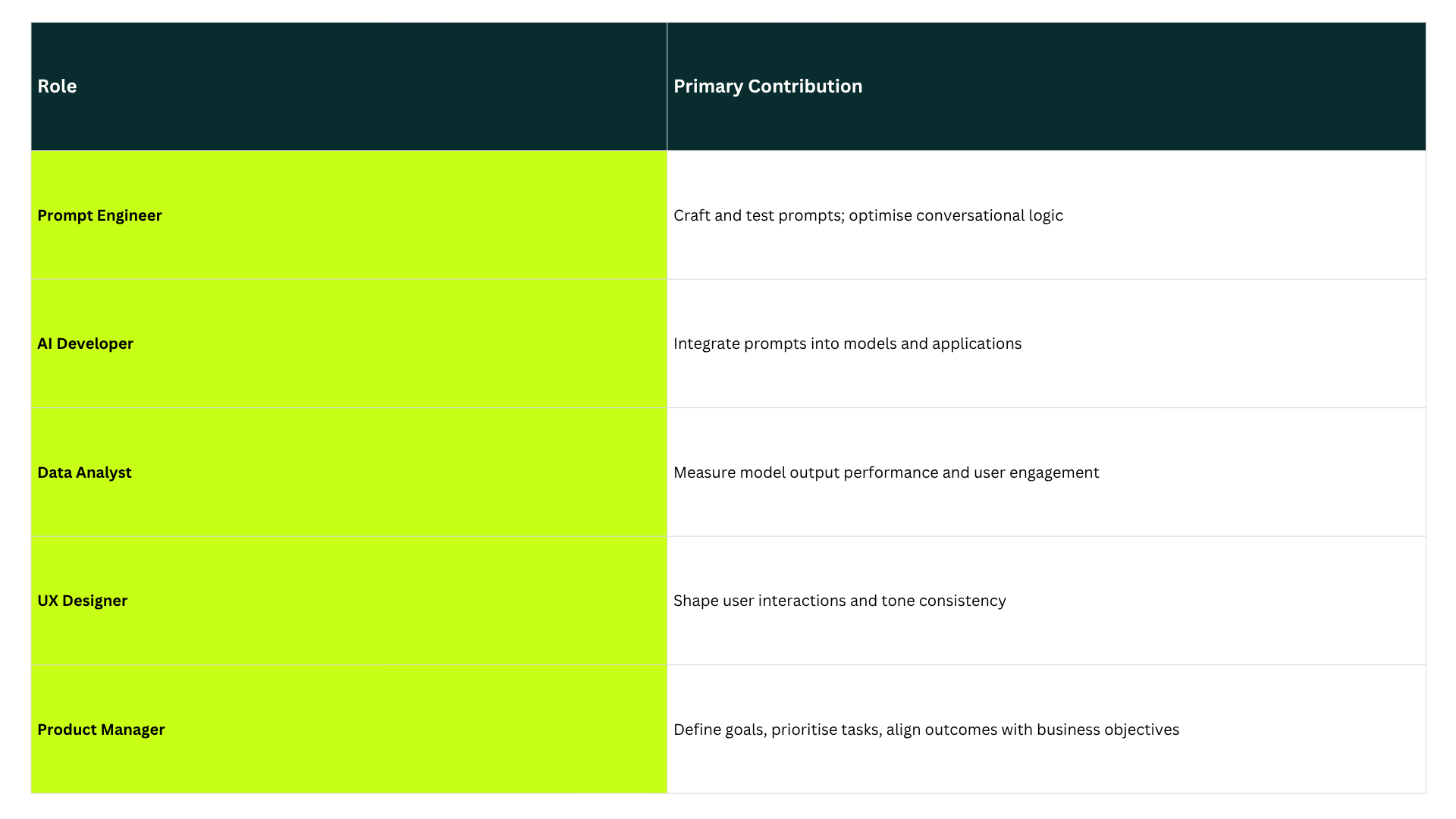

Prompt engineers work best when embedded within cross-functional AI pods — teams that combine technical, design, and domain expertise.

Recommended structure:

Encouraging collaboration ensures prompt engineers design with user experience in mind, not just technical accuracy.

As your AI team scales, documentation becomes the foundation for consistency and scalability.

Best practices include:

A well-documented knowledge base ensures that new offshore engineers can onboard quickly and contribute without reinventing previous work.

Given the pace of innovation in AI, even the best engineers need ongoing learning opportunities. Encourage offshore teams to stay updated through:

Investing in continuous learning strengthens retention, loyalty, and innovation.

Because prompt engineers frequently work with sensitive text and data, security and compliance protocols must be embedded into workflows.

Implement:

A compliant environment not only builds client trust but also protects your organisation’s intellectual property.

Once your offshore prompt team matures, focus on process automation and performance measurement:

Scaling isn’t about adding more people — it’s about multiplying efficiency through structure, tooling, and insight.

Finally, empower offshore prompt engineers to own outcomes, not just tasks.

Encourage them to:

When treated as partners rather than outsourced labour, offshore engineers evolve into strategic contributors driving your AI roadmap forward.

A high-performing offshore prompt engineering team doesn’t emerge by chance — it’s built through clarity, collaboration, and continuous iteration. By combining structured onboarding, shared metrics, secure workflows, and a culture of innovation, organisations can transform offshore AI talent into a scalable engine for creativity and intelligence.

While the potential of offshore prompt engineering is enormous, organisations often face practical challenges when operationalising it — especially as LLMs evolve faster than most traditional development disciplines. Below are some of the most common obstacles companies encounter when hiring, managing, and scaling offshore prompt engineers — along with proven strategies to overcome them.

The Challenge:

The world of Large Language Models (LLMs) is moving at breakneck speed. New models, frameworks, and fine-tuning techniques are released almost monthly. This creates constant uncertainty around which model or configuration delivers the best performance for a given use case.

Many organisations struggle to keep pace, especially when internal teams are stretched thin or lack in-house AI R&D capability. Offshore engineers, if not chosen wisely, may also fall behind if they rely on outdated knowledge or older frameworks.

The Solution:

Hire adaptable, experiment-driven engineers who treat learning as part of their daily workflow. When evaluating candidates, prioritise curiosity and experimentation over years of experience with a single tool.

Practical steps to mitigate this challenge include:

This creates a culture of continuous learning that keeps your team aligned with cutting-edge model capabilities.

The Challenge:

One of the biggest technical limitations of current LLMs is their limited context window. When dealing with multi-turn conversations or long documents, models can easily “forget” earlier parts of the interaction — leading to inconsistent or incorrect responses. This issue is amplified in enterprise workflows where prompts rely on previous user inputs, external data, or long knowledge bases.

The Solution:

To mitigate context loss, implement structured prompt chaining and memory management systems. Offshore prompt engineers should be skilled in building pipelines that preserve and reintroduce relevant information dynamically.

Recommended practices:

By systematising memory and chaining, teams can ensure consistent AI behaviour even in long, complex conversations.
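A common building block here is a trimming step that runs before every model call. This minimal sketch makes two assumptions:

- Messages are chat-style, with `role` and `content` fields.
- Tokens are estimated crudely at four characters each. Production code should count tokens with the target model's own tokenizer.

The function always keeps the system message, then keeps the newest turns that fit the budget.

```python
from typing import Dict, List

Message = Dict[str, str]

def approx_tokens(text: str) -> int:
    """Rough token estimate; real systems should use the model's tokenizer."""
    return max(1, len(text) // 4)

def fit_to_budget(messages: List[Message], budget: int) -> List[Message]:
    """Keep the system message plus the most recent turns that fit the budget.

    Turns are walked from newest to oldest; once one does not fit, all older
    turns are dropped too, so the kept history stays contiguous.
    """
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(approx_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for m in reversed(turns):
        cost = approx_tokens(m["content"])
        if used + cost > budget:
            break
        kept.append(m)
        used += cost
    return system + list(reversed(kept))

history = [
    {"role": "system", "content": "You are a helpful support agent."},
    {"role": "user", "content": "My order #123 is late." * 10},
    {"role": "assistant", "content": "Sorry to hear that. Let me check."},
    {"role": "user", "content": "Any update?"},
]
trimmed = fit_to_budget(history, budget=30)
print([m["role"] for m in trimmed])
```

In a chained pipeline, dropped turns could instead be summarised by an earlier model call and re-inserted as a single message. That summarisation step is omitted here.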

The Challenge:

Prompt engineering often involves exposure to sensitive text: internal communications, customer records, or proprietary data. Offshore setups can introduce perceived or real risks around data handling, IP protection, and compliance with regulations and standards (GDPR, HIPAA, ISO 27001, etc.). Without strict protocols, you risk model misuse, data leakage, or non-compliance with local and international standards.

The Solution:

Build a compliance-first framework that safeguards both your organisation and your offshore engineers.

Best practices include:

When managed correctly, offshore prompt engineering can meet the same security benchmarks as in-house AI operations.
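One control that fits almost any offshore setup is redacting personal data before text leaves your environment, whether it goes to an external reviewer or to a third-party model API. This minimal sketch assumes regex-based detection is acceptable for a first pass. The two patterns are illustrative only: they will miss many kinds of personal data, such as names and postal addresses, and may occasionally over-match long numeric IDs.

```python
import re

# Illustrative patterns only; production redaction needs broader, tested rules
# (names, addresses, account numbers) and often a dedicated PII-detection service.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

def redact(text: str) -> str:
    """Replace matched personal data with typed placeholders before the text
    is shared with reviewers or sent to an external LLM."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(redact("Contact jane.doe@example.com or +44 20 7946 0958 about order 55."))
```

Typed placeholders such as `[EMAIL]` keep the text useful for prompt testing. The model can still see that an email address was present without seeing the address itself.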

The Challenge:

As your library of prompts grows, ensuring consistent tone, accuracy, and performance becomes difficult — especially when multiple engineers contribute to the same codebase or project.

Without proper governance, teams risk duplication, conflicting instructions, or version drift, which degrades AI reliability and brand alignment.

The Solution:

Introduce prompt version control, quality checks, and peer review protocols — similar to how software engineers manage codebases.

Key strategies:

This process ensures prompt reliability, reproducibility, and continuous improvement — critical for scaling enterprise-grade AI applications.
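The discipline software teams apply to code can also be applied to prompts. Below is a minimal sketch of a hypothetical in-memory prompt registry; it is not the API of any prompt-management product. It does three things:

- Each published template gets a version number and a content hash.
- Republishing an identical template is ignored.
- A simple automated gate rejects templates whose placeholders don't match the inputs the calling code declares.

In practice, the same checks would typically run in CI alongside peer review of the prompt diff.

```python
import hashlib
import string
from dataclasses import dataclass, field
from typing import Dict, List, Set

def placeholders(template: str) -> Set[str]:
    """Names of {fields} used in a str.format-style template."""
    return {name for _, name, _, _ in string.Formatter().parse(template) if name}

@dataclass
class PromptRegistry:
    """Hypothetical in-memory registry: each prompt name maps to its versions."""
    versions: Dict[str, List[dict]] = field(default_factory=dict)

    def publish(self, name: str, template: str, inputs: Set[str], author: str) -> int:
        # Quality gate: the template must use exactly the declared inputs.
        used = placeholders(template)
        if used != inputs:
            raise ValueError(
                f"placeholder mismatch: template uses {sorted(used)}, declared {sorted(inputs)}")
        digest = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        history = self.versions.setdefault(name, [])
        if history and history[-1]["hash"] == digest:
            return history[-1]["version"]  # unchanged: no new version
        version = len(history) + 1
        history.append({"version": version, "hash": digest,
                        "template": template, "author": author})
        return version

    def latest(self, name: str) -> str:
        return self.versions[name][-1]["template"]

reg = PromptRegistry()
v1 = reg.publish("complaint_summary", "Summarise: {complaint}", {"complaint"}, author="asha")
v2 = reg.publish("complaint_summary",
                 "Summarise in {n} neutral sentences: {complaint}",
                 {"complaint", "n"}, author="marek")
print(v1, v2, reg.latest("complaint_summary"))
```

Recording the author alongside each version gives reviewers the same "who changed what" trail they expect from a code repository.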

The Challenge:

When working with offshore teams, differences in communication style, time zone, or feedback culture can create friction. Prompt engineering, being highly iterative and nuanced, depends heavily on clear intent alignment — even minor misunderstandings can derail outcomes.

The Solution:

Bridge cultural gaps through structure and a shared language of collaboration.

When communication flows freely, offshore prompt engineers evolve from task executors to co-creators of strategic AI outcomes.

The Challenge:

As your offshore AI operations expand, managing multiple prompt engineers, model iterations, and review cycles can slow innovation. What starts as a fast-moving experiment can quickly become bureaucratic and fragmented.

The Solution:

Scale through process automation and modular team design:

This structure allows you to grow capacity without diluting agility or creative flow — the two cornerstones of effective AI innovation.

Building an offshore prompt engineering team comes with challenges — but each one can be mitigated with the right mix of process discipline, cultural alignment, and technical tooling. By combining structured experimentation, secure data practices, and collaborative frameworks, organisations can transform these challenges into competitive advantages.

Offshore prompt engineers, when empowered with the right systems and trust, don’t just keep up with the LLM revolution — they lead it.

At Remote Office, we’ve developed deep expertise in hiring offshore prompt engineers who bring together creativity, technical fluency, and AI domain understanding.

Through our extensive networks across India, the Philippines, and Eastern Europe, we help companies:

✅ Hire pre-vetted prompt engineers skilled in LLMs, automation frameworks, and AI agents

✅ Scale conversational and generative AI initiatives quickly and cost-effectively

✅ Ensure communication and quality control through local account managers

✅ Reduce development costs by up to 70% while accelerating AI delivery

We don’t just connect you with engineers — we help you build sustainable AI capability that powers long-term business growth.

Hiring offshore prompt engineers isn’t just about lowering costs — it’s about unlocking global creativity and technical precision to drive intelligent automation and human-like AI experiences. With the right partner, you can build offshore teams that not only understand how LLMs work — but how to make them work for your unique business goals. If you’re ready to scale your AI initiatives, Remote Office can connect you with world-class offshore prompt engineers who turn language into impact.